To select the color for a sample area of interest, choose the appropriate palette color, then click and drag over pixels within that area to color in the region. Once the labeling of a frame is complete, export the overlaid layer as a separate image file, taking care that its base filename matches the original frame's base filename but with a C appended to the end.

To quantitatively validate the accuracy of the trained classifier, select frames from the original video sequence that were not already included in the training set. Label the pixels in each of these frames as just demonstrated for the training frames, being as precise and as comprehensive as possible. When the labeling of a frame is complete, use the same naming convention as for the training frames, but save the files in a separate validation frames folder.

For automatic pixel labeling of the video sequence, launch the Darwin graphical user interface and click load training labels. To configure the GUI for training and labeling, select create project and give the project a name using the popup dialogue box. Select the folder containing all of the original frames of the video sequence in the popup window. Then, in the file explorer dialogue box, select the folder containing all of the labeled validation images for the relevant video sequence. Using the popup file explorer dialogue box, select the folder containing the labeled training images for the relevant video sequence. Follow the prompt to select a destination folder for all of the output frames. These will be labeled images that use the same color palette as the training images. In the popup dialogue box, under areas of interest, enter the areas of interest to label, including the red/green/blue values used to mark each region in the training examples. The algorithm will then examine each labeled training frame and learn an appearance model for classifying the pixels into any of the specified object classes of interest.
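The naming convention and the validation step can be sketched in a few lines of Python. This is a minimal sketch, not Darwin's own evaluation. It assumes that the classifier's output frames and the hand-labeled validation frames have already been loaded as grids of (R, G, B) tuples with identical dimensions. That assumption is reasonable because the output uses the same palette as the training labels. The function names `labeled_filename`, `pixel_accuracy` and `per_class_accuracy`, the `.png` extension, and the optional `ignore` color for unlabeled pixels are all hypothetical.

```python
from collections import defaultdict
from pathlib import Path
from typing import Dict, List, Optional, Tuple

Color = Tuple[int, int, int]   # an (R, G, B) label color from the palette
Image = List[List[Color]]      # rows of pixels, loaded with any image library


def labeled_filename(frame_name: str, suffix: str = "C", ext: str = ".png") -> str:
    """Hypothetical helper: label-file name = original base name + 'C'.

    The '.png' extension is an assumption; use whatever format the overlay
    layer is actually exported in.
    """
    return Path(frame_name).stem + suffix + ext


def _check_same_shape(predicted: Image, truth: Image) -> None:
    if len(predicted) != len(truth) or any(
        len(p) != len(t) for p, t in zip(predicted, truth)
    ):
        raise ValueError("predicted and validation images differ in size")


def pixel_accuracy(predicted: Image, truth: Image,
                   ignore: Optional[Color] = None) -> float:
    """Fraction of pixels whose predicted color equals the hand-labeled color.

    Ground-truth pixels equal to `ignore` (e.g. left unlabeled) are skipped.
    """
    _check_same_shape(predicted, truth)
    correct = total = 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            if t == ignore:
                continue
            total += 1
            correct += p == t  # True counts as 1
    if total == 0:
        raise ValueError("no labeled pixels to compare")
    return correct / total


def per_class_accuracy(predicted: Image, truth: Image,
                       ignore: Optional[Color] = None) -> Dict[Color, float]:
    """For each ground-truth color (AOI class), the share of its pixels predicted correctly."""
    _check_same_shape(predicted, truth)
    hits: Dict[Color, int] = defaultdict(int)
    counts: Dict[Color, int] = defaultdict(int)
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            if t == ignore:
                continue
            counts[t] += 1
            hits[t] += p == t
    return {color: hits[color] / counts[color] for color in counts}
```

Reporting per-class figures alongside the overall score helps reveal whether a single area of interest is being confused with its neighbors. A high overall accuracy can otherwise hide that problem.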

## Eye tracking data analysis software

To design the areas of interest (AOIs), select items that are of interest to the study, such as trees, shrubs, signposts, buildings, paths and steps. For optimal performance and minimal training requirements, use elements that are easily distinguishable from each other with the naked eye and/or that consistently occupy different regions of each video frame. When all of the items have been identified, select an appropriate number of training frames to make up the training set. There is no fixed number that is appropriate. In general, including enough training examples to depict the visually distinguishing differences of each AOI should be sufficient for robust performance. Next, open each training frame from the video in the image editing software and overlay a transparent image layer on the loaded image for labeling. Then create a color palette that provides one color for each object class of interest.
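The palette and training-set steps are sketched below. This is an illustration under stated assumptions, not part of the described software. `PALETTE` is a hypothetical set of RGB values, one per AOI class. `color_to_class` checks the requirement that every class has its own color. `pick_training_frames` is a hypothetical heuristic that spreads training frames evenly through the video, which is only a starting point. Frames should still be checked by eye to confirm that each AOI's distinguishing appearance is represented.

```python
from typing import Dict, List, Tuple

Color = Tuple[int, int, int]

# Hypothetical AOI palette: one distinct RGB color per object class of interest.
PALETTE: Dict[str, Color] = {
    "tree": (0, 128, 0),
    "shrub": (128, 255, 0),
    "signpost": (255, 255, 0),
    "building": (255, 0, 0),
    "path": (128, 64, 0),
    "steps": (0, 0, 255),
}


def color_to_class(palette: Dict[str, Color]) -> Dict[Color, str]:
    """Invert the palette so a labeled pixel's color maps back to its AOI name.

    Raises ValueError if two classes share a color, since their labels
    would then be indistinguishable.
    """
    inverse: Dict[Color, str] = {}
    for name, color in palette.items():
        if color in inverse:
            raise ValueError(f"{name!r} and {inverse[color]!r} share color {color}")
        inverse[color] = name
    return inverse


def pick_training_frames(n_frames: int, n_train: int) -> List[int]:
    """Return n_train frame indices spread evenly over a video of n_frames frames."""
    if not 0 < n_train <= n_frames:
        raise ValueError("need 0 < n_train <= n_frames")
    step = n_frames / n_train
    # Take the middle frame of each of n_train equal-length segments.
    return [int(i * step + step / 2) for i in range(n_train)]
```

Keeping the palette in one place also makes it easy to enter the same red/green/blue values consistently when the areas of interest are defined for automatic labeling.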

For this type of research, a team approach is essential, as there are multiple aspects that require high-level input and consideration. Demonstrating the procedure with me will be my postgraduate student Andrew Treller.

The film sequences should be shown in an eye tracking laboratory where natural light is available but can be controlled to avoid reflections on the screen. Use as large a screen as possible so that the films occupy as much of the visual field as possible, thereby avoiding distractions from outside the field of view. After seating the participant 60-65 centimeters from the screen, ask them to imagine being in need of restoration, using a sentence that places them in the context of the eye tracking video. Then play the films to the participant in a predetermined random order, using a desktop eye tracking device to record the participant's eye movements during each video.
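Two quantities in this setup can be made concrete: the predetermined random order and how much of the visual field the screen covers at the stated viewing distance. The sketch below is one way to compute both. The seeding scheme in the hypothetical `presentation_order` function is an assumption. It fixes each participant's order in advance so that it can be regenerated later when the recordings are analyzed. `visual_angle_deg` applies the standard formula $\theta = 2\arctan\big(s / (2d)\big)$ for an object of size $s$ viewed from distance $d$.

```python
import math
import random
from typing import List, Sequence


def presentation_order(films: Sequence[str], participant_id: int,
                       study_seed: int = 1) -> List[str]:
    """Reproducible random film order for one participant.

    Seeding with (study_seed, participant_id) fixes the order in advance
    and lets it be regenerated exactly for later analysis.
    """
    rng = random.Random(f"{study_seed}:{participant_id}")
    order = list(films)
    rng.shuffle(order)
    return order


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle in degrees subtended by an object of size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))
```

For example, evaluating `visual_angle_deg(width, 60)` and `visual_angle_deg(width, 65)` for the width of the screen in use gives the horizontal field it spans across the 60-65 cm seating range. A larger screen raises that angle and leaves less of the surrounding room in view.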

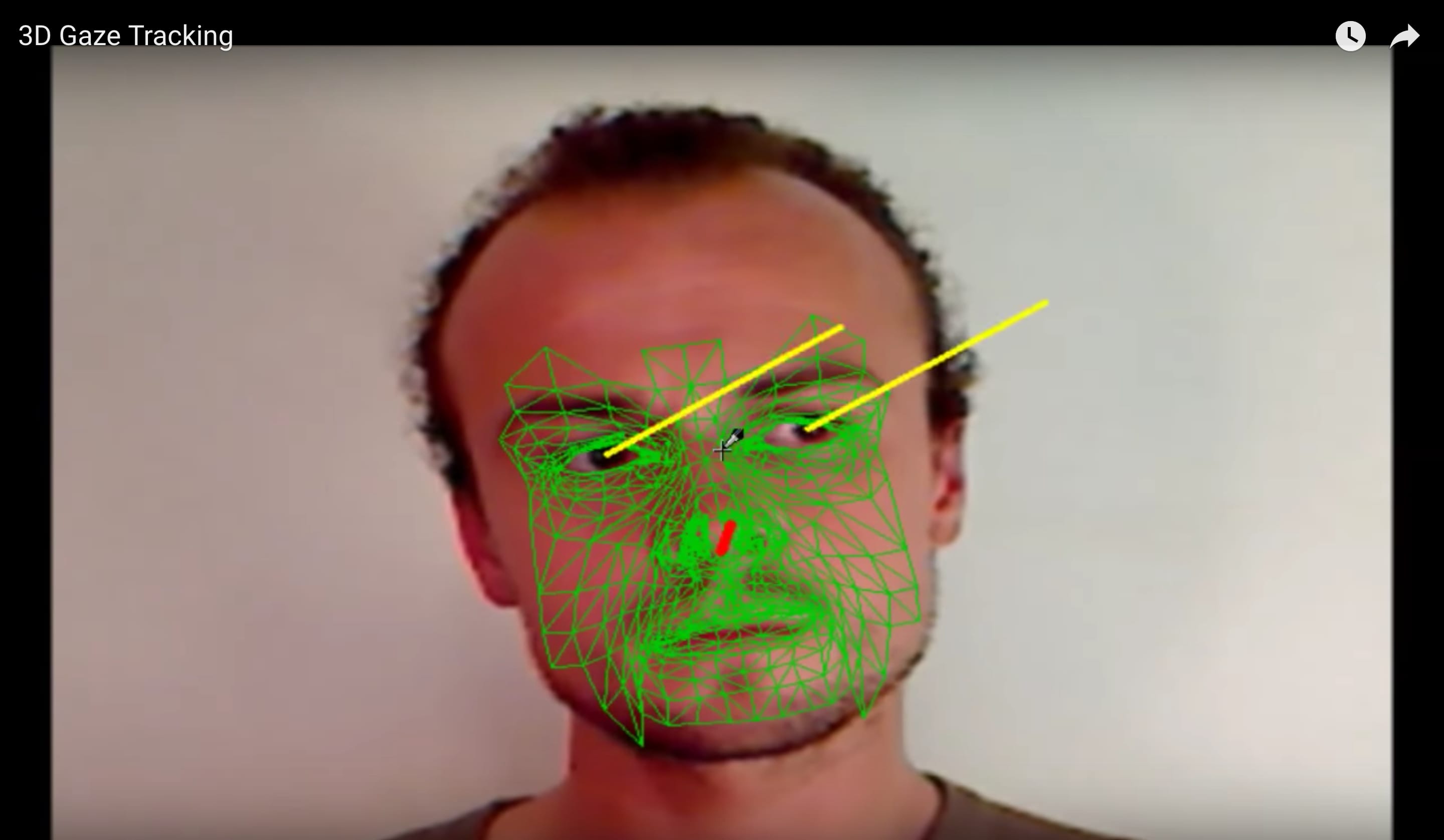

Many eye tracking studies rely on complex video stimuli and real-world settings, which makes analysis of the data highly complex. This analysis technique allows a richer and more automated approach to analyzing video-based data than currently available methods, enabling the extraction of more complex data. It could be used in many different eye tracking applications, particularly in real-world situations or studies that use video as a stimulus. For example, landscape studies have relied on understanding how people react to different visual stimuli. This technique, combined with eye tracking, could be used to test the assumptions underlying that work.
